The robots.txt file is a plain-text file containing instructions that tell search engine robots which pages of a website they should and should not crawl. Each instruction either ‘allows’ or ‘disallows’ crawling, and can be aimed at a specific bot or at all bots.

Why is the robots.txt file important?

Because it is the first file a search engine robot looks for on your site, robots.txt is the place to exclude any pages you do not want crawled. Well-behaved robots will follow the instructions it contains.*

*N.B. Some user agents (robots) may ignore your robots.txt file. This is especially common with malicious crawlers such as malware robots and email address scrapers.

How does the robots.txt file work?

When a search engine robot visits a website, its first action is to look for a robots.txt file. If it finds one, it reads the file and follows its rules before crawling anything else.
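The read-then-obey order described above can be sketched with the `urllib.robotparser` module from Python's standard library. This is a minimal sketch, assuming the robots.txt text has already been downloaded; the function name `crawlable_urls`, the user-agent ‘ExampleBot’ and the `/admin/` path are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def crawlable_urls(robots_txt: str, user_agent: str, candidate_urls: list[str]) -> list[str]:
    """Return the candidate URLs that robots_txt allows user_agent to crawl."""
    parser = RobotFileParser()
    # Read the rules once, before any page on the site is requested.
    parser.parse(robots_txt.splitlines())
    # Check each URL against those rules and keep only the permitted ones.
    return [url for url in candidate_urls if parser.can_fetch(user_agent, url)]


rules = "User-agent: *\nDisallow: /admin/\n"
urls = [
    "https://www.websitesyork.co.uk/",
    "https://www.websitesyork.co.uk/admin/login",  # hypothetical path
]
print(crawlable_urls(rules, "ExampleBot", urls))
# ['https://www.websitesyork.co.uk/']
```

Parsing the file once and checking every URL against the same parser mirrors how a polite crawler reads robots.txt before requesting any other page.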

The robots.txt file must always be placed in the root directory of your website.

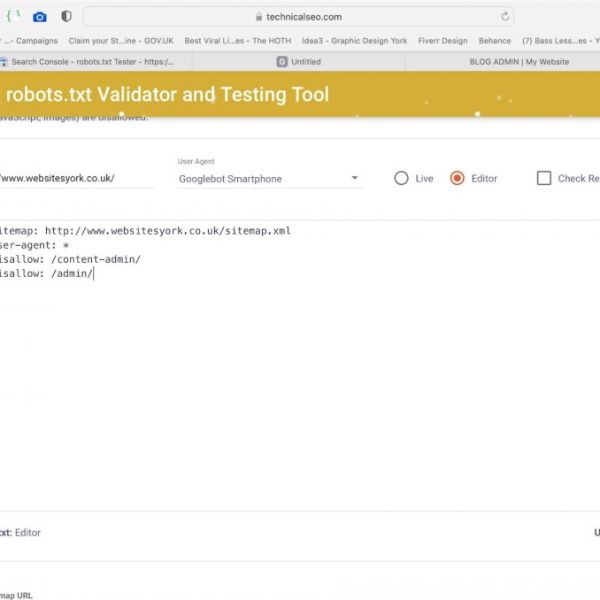

For example, for the website ‘www.websitesyork.co.uk’, the robots.txt file is located at ‘www.websitesyork.co.uk/robots.txt’. If the file is placed anywhere else, search engine crawlers will assume that the site does not have a robots.txt file.
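Because the file always sits at the root, a crawler can work out where to look from any page address on the site. The sketch below assumes absolute URLs that include a scheme such as `https://`; it keeps only the scheme and host and replaces everything else. The function name `robots_url` and the page path are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt address for the site that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any port), put /robots.txt at the
    # root, and drop the query string and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical page URL on the example site.
print(robots_url("https://www.websitesyork.co.uk/services/web-design/?ref=home"))
# https://www.websitesyork.co.uk/robots.txt
```

A bare address without a scheme, such as ‘www.websitesyork.co.uk/page’, would be read by `urlsplit` as a path rather than a host, which is why the sketch assumes full URLs.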

The robots.txt file syntax

A robots.txt file is made up of one or more blocks of ‘directives’ (rules). Each block names a ‘user-agent’ (the search engine bot it applies to) and gives one or more ‘Allow’ or ‘Disallow’ instructions.

The first line of every block of directives is the ‘User-agent’ line, which identifies the crawler that the block addresses.

The ‘User-agent’ line is usually followed by one or more ‘Disallow’ lines, which specify the parts of your site that the crawler is not allowed to access.

An empty ‘Disallow’ line means that nothing is disallowed, so the crawler may access every section of your site. The ‘Allow’ directive lets search engines crawl a subdirectory or a specific page, even inside an otherwise disallowed directory.
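The effect of ‘Allow’ inside a disallowed directory can be checked with Python's `urllib.robotparser`. In this sketch the `/private/` and `/blog/` paths and the user-agent ‘ExampleBot’ are hypothetical. One caveat: Python's parser applies the first rule in a block that matches, so the ‘Allow’ line is listed before the ‘Disallow’ line; Google instead applies the most specific (longest) matching rule, under which the order of these two lines would not matter.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block /private/ but allow one page inside it.
# The Allow line comes first because Python's parser uses the first matching rule.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/public-page.html
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

site = "https://www.websitesyork.co.uk"
for path in ["/private/public-page.html", "/private/report.html", "/blog/"]:
    print(path, parser.can_fetch("ExampleBot", site + path))
# /private/public-page.html True
# /private/report.html False
# /blog/ True
```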

Examples

1. Blocking all web crawlers from all content

    User-agent: *
    Disallow: /

2. Allowing all web crawlers access to all content

    User-agent: *
    Disallow:

3. Blocking a specific web crawler from a specific folder

    User-agent: Googlebot
    Disallow: /example-subfolder/
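The three example files can be tested the same way. This sketch feeds each one to Python's `urllib.robotparser`; the helper `rules_for`, the page path `/example-subfolder/page.html` and the choice of Bingbot as a second crawler are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def rules_for(robots_txt: str) -> RobotFileParser:
    """Hypothetical helper: build a parser from robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


block_all = rules_for("User-agent: *\nDisallow: /")                          # example 1
allow_all = rules_for("User-agent: *\nDisallow:")                            # example 2
block_folder = rules_for("User-agent: Googlebot\nDisallow: /example-subfolder/")  # example 3

page = "https://www.websitesyork.co.uk/example-subfolder/page.html"  # hypothetical page
print(block_all.can_fetch("Bingbot", page))       # False
print(allow_all.can_fetch("Bingbot", page))       # True
print(block_folder.can_fetch("Googlebot", page))  # False
print(block_folder.can_fetch("Bingbot", page))    # True: no block names Bingbot
```

The last check shows that example 3 only restricts Googlebot: a crawler that is not named in any block, and has no ‘*’ block to fall back on, is allowed everywhere.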